A Progressive Transformer for Unifying Binary Code Embedding and Knowledge Transfer

May 21, 2025

Hanxiao Lu

Hongyu Cai

Yiming Liang

Antonio Bianchi

Z. Berkay Celik

Image credit: Unsplash

Abstract

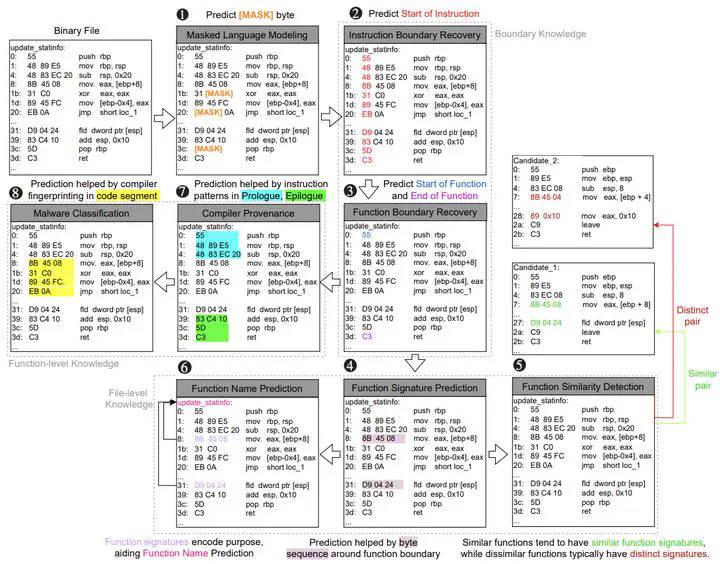

Language models have recently been applied to binary analysis tasks such as function similarity detection and function signature recovery. These models typically follow a two-stage training process: pre-training via Masked Language Modeling (MLM) on machine code, followed by fine-tuning for specific tasks. Although MLM helps a model learn the structure of binary code, it ignores essential code characteristics, including control and data flow, and this hurts model generalization. Recent work leverages domain-specific features (e.g., control flow graphs and dynamic execution traces) in transformer-based approaches to improve the semantic understanding of binary code. This approach, however, relies on complex feature engineering, a cumbersome and time-consuming process that can introduce predictive uncertainty when handling stripped or obfuscated code, leading to a drop in performance. We introduce PROTST, a novel transformer-based methodology for binary code embedding. PROTST employs a hierarchical training process based on a unique tree-like structure, in which knowledge flows progressively from fundamental tasks at the root to more specialized tasks at the leaves. This progressive teacher-student paradigm allows the model to build on previously learned knowledge, yielding high-quality embeddings that can be effectively leveraged for diverse downstream binary analysis tasks. We evaluate PROTST on seven binary analysis tasks, where it achieves an average improvement of 14.8% in F1 and MRR over traditional two-stage training, and a 16.6% improvement when analyzing obfuscated code.
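The abstract describes the progressive teacher-student paradigm only at a high level. As a rough illustration, the sketch below shows one way a tree-shaped task curriculum could be scheduled so that each task is taught by its parent only after the parent has been trained, with a standard Hinton-style knowledge distillation loss standing in for whatever objective PROTST actually uses. The `TaskNode` class, the task names (`mlm`, `code_semantics`, `function_similarity`, `signature_recovery`), the breadth-first ordering, and the `alpha`/`temperature` defaults are all hypothetical choices for illustration, not details taken from the paper.

```python
from __future__ import annotations

import math
from collections import deque
from dataclasses import dataclass, field


@dataclass
class TaskNode:
    """One task in a hypothetical progressive task tree (not from the paper)."""
    name: str
    children: list[TaskNode] = field(default_factory=list)


def training_schedule(root: TaskNode) -> list[tuple[str | None, str]]:
    """Return (teacher, student) pairs in breadth-first order.

    The root has no teacher; every other task is taught by its parent.
    A parent always appears before its children, so each teacher is
    already trained when its students start.
    """
    schedule: list[tuple[str | None, str]] = [(None, root.name)]
    queue = deque([root])
    while queue:
        parent = queue.popleft()
        for child in parent.children:
            schedule.append((parent.name, child.name))
            queue.append(child)
    return schedule


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax over a list of logits."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: list[float], teacher_logits: list[float],
                      label: int, alpha: float = 0.5,
                      temperature: float = 2.0) -> float:
    """Hinton-style distillation loss, used here as an assumed stand-in.

    alpha weights the soft-target KL(teacher || student) term against the
    hard-label cross-entropy; the KL term is scaled by T^2 by convention.
    """
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_t, p_s))
    ce = -math.log(softmax(student_logits)[label])  # cross-entropy at T = 1
    return alpha * temperature ** 2 * kl + (1 - alpha) * ce


if __name__ == "__main__":
    # Hypothetical tree: a fundamental root task feeding more specialized leaves.
    tree = TaskNode("mlm", [
        TaskNode("code_semantics", [
            TaskNode("function_similarity"),
            TaskNode("signature_recovery"),
        ]),
    ])
    for teacher, student in training_schedule(tree):
        print(teacher, "->", student)
    # None -> mlm
    # mlm -> code_semantics
    # code_semantics -> function_similarity
    # code_semantics -> signature_recovery
```

Breadth-first order is only one valid choice; any parent-before-child traversal (for example, pre-order depth-first) satisfies the same constraint that a teacher is trained before its students.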

Type

Publication

In IEEE International Conference on Software Analysis, Evolution and Reengineering, 2025