Membership and Memorization in LLM Knowledge Distillation

Jun 17, 2025

Ziqi Zhang

Ali Shahin Shamsabadi

Hanxiao Lu

Yifeng Cai

Hamed Haddadi

Image credit: Unsplash

Abstract

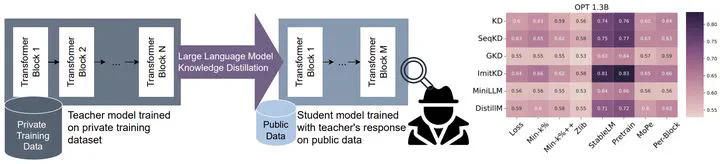

Recent advances in Knowledge Distillation (KD) aim to mitigate the high computational demands of Large Language Models (LLMs) by transferring knowledge from a large “teacher” model to a smaller “student” model. However, students may inherit the teacher’s privacy risks when the teacher is trained on private data. In this work, we systematically characterize and investigate the membership privacy risks inherent in six LLM KD techniques. Using instruction-tuning settings that span seven NLP tasks, three teacher model families (GPT-2, LLAMA-2, and OPT), and student models of various sizes, we demonstrate that all existing LLM KD approaches carry membership and memorization privacy risks from the teacher to its students. The extent of these risks, however, varies across KD techniques. We systematically analyse how key LLM KD components (KD objective functions, student training data, and NLP tasks) affect these privacy risks. We also demonstrate a significant disagreement between the memorization and membership privacy risks of LLM KD techniques. Finally, we characterize per-block privacy risk and demonstrate that it varies by a large margin across blocks.

Publication

In Proceedings of the 2025 Conference on Empirical Methods in Natural Language Processing